Projects

Ongoing Projects

Funded by ERC (2025-2026)

Understanding causation is vital in AI, especially in fields such as healthcare and finance, where decisions affect lives and economies. Current AI models often lack transparency and rely on opaque methods, which makes it difficult for experts to verify or contest their findings. To address this gap, the ERC-funded CArLA project aims to revolutionise causal discovery, the task of uncovering causal relationships from data. Building on the robust methodologies and explainable AI (XAI) principles of the ERC ADIX project, CArLA's platform will make causal discovery more transparent, interactive and contestable. The project will develop demonstrators in healthcare and finance that enable experts to influence and verify AI-generated insights, fostering a more reliable, human-aligned approach to AI for high-stakes decision-making.

Funded by ERC (2021-2026)

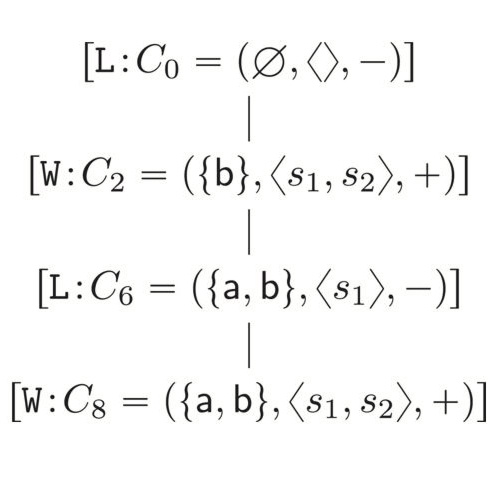

ADIX strives towards a radical rethinking of explainable AI, so that it can work in synergy with humans within a human-centred but AI-supported society. It aims to define a novel scientific paradigm of deep, interactive explanations that can be deployed alongside a variety of data-centric AI methods, explaining their outputs by providing justifications in their support. Humans can progressively question these justifications, and the outputs of the AI methods can be refined in response to human feedback, within explanatory exchanges between humans and machines. This paradigm is being realised with computational argumentation as its underpinning, unifying theoretical foundation.